Overview

This project builds a machine learning model called Car Power that predicts a car's power performance factor. The model is served with BentoML (“a framework for building reliable, scalable and cost-efficient AI applications.”) and trained on a free dataset called car_dataset.

Code Repository: https://github.com/Esteban-Quevedo/carpf_model

Repository Content

Understanding and Analyzing the Data

The first resource is a Jupyter Notebook with code that provides a comprehensive analysis of the dataset, covering data loading, exploration, visualization, and preprocessing. The scatter plots are particularly useful for understanding how individual variables relate to the power performance factor. The preprocessing steps filter rows using conditions on several numeric columns and remove unnecessary columns.

Code: https://github.com/Esteban-Quevedo/carpf_model/tree/main/Dataset_Analysis
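The scatter plots described here can be reproduced with a short sketch like the one below. It is a variant of the notebook's approach: it draws the three predictor-versus-Power_perf_factor plots side by side with plt.subplots() instead of separate plt.scatter() calls. The helper name plot_against_target and the figure layout are assumptions for illustration, not the notebook's actual code.

```python
import matplotlib

matplotlib.use("Agg")  # non-interactive backend so the sketch also runs headless
import matplotlib.pyplot as plt
import pandas as pd

# Predictor columns plotted against the target, as listed in the notebook description.
PAIRS = ["Engine_size", "Fuel_efficiency", "Price_in_thousands"]
TARGET = "Power_perf_factor"


def plot_against_target(df: pd.DataFrame) -> plt.Figure:
    """Hypothetical helper: one scatter plot per predictor column versus Power_perf_factor."""
    fig, axes = plt.subplots(1, len(PAIRS), figsize=(15, 4))
    for ax, column in zip(axes, PAIRS):
        ax.scatter(df[column], df[TARGET], s=10)
        ax.set_xlabel(column)
        ax.set_ylabel(TARGET)
        ax.set_title(f"{column} vs {TARGET}")
    fig.tight_layout()
    return fig


# Usage with the real data (file path assumed relative to the notebook):
# df = pd.read_csv("car_dataset.csv")
# plot_against_target(df).savefig("scatter_plots.png")
```

Placing the plots side by side makes it easier to compare how strongly each variable tracks the target.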

This Jupyter Notebook performs the following tasks:

- Data Loading and Exploration:

  - Loads a dataset named 'car_dataset.csv' into a Pandas DataFrame called df.

  - Displays the first few rows of the dataset using df.head().

  - Provides information about the dataset using df.info().

  - Describes the statistical summary of the numerical columns using df.describe().

  - Checks for missing values using df.isna().sum().

- Data Visualization:

  - Creates histograms for each numerical column using df.hist().

  - Generates scatter plots to visualize relationships between selected pairs of variables (Engine_size vs Power_perf_factor, Fuel_efficiency vs Power_perf_factor, Price_in_thousands vs Power_perf_factor) using plt.scatter().

- Data Preprocessing:

  - Defines two functions (manufacturer_replacement and vehicle_type_replacement) to replace categorical values in the 'Manufacturer' and 'Vehicle_type' columns with numerical values.

  - Defines a function (process_data) to perform various data filtering and manipulation steps:

    - Replaces categorical values in 'Manufacturer' and 'Vehicle_type' using the defined functions.

    - Filters the dataset based on specific conditions for columns like 'Price_in_thousands', 'Engine_size', 'Horsepower', 'Curb_weight', 'Fuel_efficiency', and 'Power_perf_factor'.

    - Drops unnecessary columns ('Sales_in_thousands' and 'year_resale_value').

- Visualizing Processed Data:

  - Re-runs scatter plots for 'Engine_size vs Power_perf_factor', 'Fuel_efficiency vs Power_perf_factor', and 'Price_in_thousands vs Power_perf_factor' after the data processing step.
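The preprocessing stage can be sketched in pandas as follows. This is a hypothetical reconstruction, not the notebook's code: the notebook's manufacturer_replacement and vehicle_type_replacement mappings are replaced here by a generic encode_categorical helper that assigns alphabetical integer codes, and every filter bound in process_data is an assumed placeholder, since the real conditions are not listed. The toy rows at the end use made-up values.

```python
import pandas as pd


def encode_categorical(series: pd.Series) -> pd.Series:
    """Replace each distinct label with an integer code (labels sorted alphabetically).

    Hypothetical stand-in for the notebook's manufacturer_replacement and
    vehicle_type_replacement functions, whose exact mappings are not shown.
    """
    return series.astype("category").cat.codes


def process_data(df: pd.DataFrame) -> pd.DataFrame:
    """Encode categoricals, filter rows, and drop unused columns (sketch)."""
    out = df.copy()
    out["Manufacturer"] = encode_categorical(out["Manufacturer"])
    out["Vehicle_type"] = encode_categorical(out["Vehicle_type"])

    # Hypothetical bounds: the notebook's real filter conditions may differ.
    # Series.between() is False for NaN, so rows with missing values are dropped too.
    mask = (
        out["Price_in_thousands"].between(0, 100)
        & out["Engine_size"].between(1, 6)
        & out["Horsepower"].between(50, 400)
        & out["Curb_weight"].between(2, 6)
        & out["Fuel_efficiency"].between(10, 50)
        & out["Power_perf_factor"].between(20, 200)
    )
    out = out[mask]

    # Drop the columns the notebook treats as unnecessary.
    return out.drop(columns=["Sales_in_thousands", "year_resale_value"])


# Toy rows with made-up values; the third row fails the assumed price filter.
toy = pd.DataFrame({
    "Manufacturer": ["Acura", "Audi", "Acura"],
    "Vehicle_type": ["Passenger", "Passenger", "Car"],
    "Sales_in_thousands": [10.0, 20.0, 30.0],
    "year_resale_value": [15.0, 18.0, 20.0],
    "Price_in_thousands": [21.5, 28.4, 150.0],
    "Engine_size": [1.8, 3.2, 3.5],
    "Horsepower": [140, 225, 210],
    "Curb_weight": [2.6, 3.5, 3.9],
    "Fuel_efficiency": [28, 25, 22],
    "Power_perf_factor": [58.0, 91.0, 80.0],
})
result = process_data(toy)
print(result)
```

Encoding happens before filtering, so the integer codes are assigned from all rows, including the ones that are later dropped.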

Technical requirements:

It is strongly recommended to use a Python virtual environment (either venv or virtualenv) to isolate this project's dependencies.

If you are not already using a virtual environment:

Create a virtual environment:

python -m venv myenv

Activate the virtual environment (on Windows):

myenv\Scripts\activate

Activate the virtual environment (on macOS/Linux):

source myenv/bin/activate

Finally, update pip (optional but recommended):

pip install --upgrade pip

Required Python Packages:

- pandas

- matplotlib

Tested with Python 3.9.18, pandas 2.1.4, and matplotlib 3.8.2.

The repository includes a requirements file with all the required dependencies, but you can also install the packages manually:

Install pandas version 2.1.4:

pip install pandas==2.1.4

Install matplotlib version 3.8.2:

pip install matplotlib==3.8.2
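To confirm that the active environment really has the pinned versions, a small check with the standard library's importlib.metadata can help. The helper name version_mismatches is hypothetical; the expected versions are the ones listed above. Type hints use the typing module so the sketch also runs on Python 3.9.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, Optional, Tuple

# Versions listed in this guide; adjust if your requirements.txt pins others.
EXPECTED = {"pandas": "2.1.4", "matplotlib": "3.8.2"}


def version_mismatches(expected: Dict[str, str]) -> Dict[str, Tuple[str, Optional[str]]]:
    """Return {package: (expected, installed)} for every package that differs.

    installed is None when the package is not installed at all.
    """
    mismatches = {}
    for package, wanted in expected.items():
        try:
            installed: Optional[str] = version(package)
        except PackageNotFoundError:
            installed = None
        if installed != wanted:
            mismatches[package] = (wanted, installed)
    return mismatches


if __name__ == "__main__":
    problems = version_mismatches(EXPECTED)
    print(problems or "All pinned versions are installed.")
```

An empty result means every listed package is installed at exactly the expected version.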

Serving an ML Model Using BentoML

The second resource is a folder that contains everything needed to create and serve the Car Power factor model through BentoML.

Code: https://github.com/Esteban-Quevedo/carpf_model/tree/main/Model_Serving

Steps to follow:

- Activate the Python virtual environment you want to use for this project (in my case I used Python 3.9.18). You can also reuse the environment from the previous step.

- Move into the Model_Serving folder:

cd Model_Serving

Here you will find four files required to run the lab and serve the model:

- requirements.txt: contains all the Python packages required to run the lab.

- main.py: processes the car dataset, trains a linear regression model, and saves the model using BentoML.

- service.py: defines a BentoML service that predicts the power performance factor using the trained model and Pydantic input data.

- bentofile.yaml: configuration file for the BentoML service.

- Install the Python requirements using the requirements.txt file:

pip install -r requirements.txt

- Execute the main code.

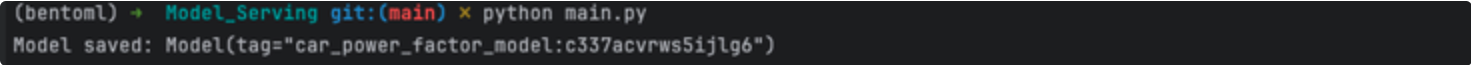

python main.py

You should get a confirmation message like this:

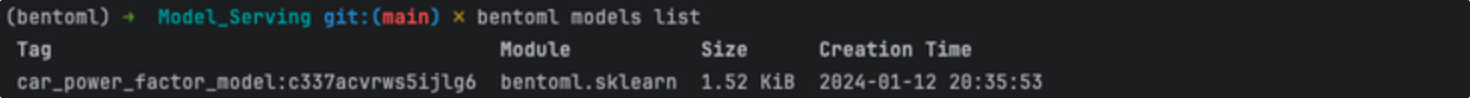
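For reference, the train-and-save step that main.py performs can be sketched as below. This is not the repository's main.py: it assumes scikit-learn's LinearRegression as the "linear regression model" and assumes every column except Power_perf_factor is used as a feature. The model name car_power_factor_model matches the name that appears in the BentoML model list; bentoml.sklearn.save_model is BentoML's scikit-learn saving function, imported lazily here so the training part can be tried without BentoML installed.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LinearRegression

TARGET = "Power_perf_factor"


def train_model(df: pd.DataFrame) -> LinearRegression:
    """Fit an ordinary least-squares model predicting Power_perf_factor from all other columns."""
    features = df.drop(columns=[TARGET])
    model = LinearRegression()
    model.fit(features, df[TARGET])
    return model


def save_model(model: LinearRegression) -> None:
    """Store the fitted model in the local BentoML model store (requires bentoml)."""
    import bentoml  # imported lazily so train_model() works without BentoML

    saved = bentoml.sklearn.save_model("car_power_factor_model", model)
    print(f"Model saved: {saved}")


# Synthetic data with an exact linear relationship, for illustration only.
rng = np.random.default_rng(0)
toy = pd.DataFrame({
    "Engine_size": rng.uniform(1, 6, 50),
    "Horsepower": rng.uniform(50, 400, 50),
})
toy[TARGET] = 2 * toy["Engine_size"] + 0.25 * toy["Horsepower"] + 1

model = train_model(toy)
print(model.coef_, model.intercept_)
# save_model(model)  # uncomment once BentoML is installed
```

Because the synthetic target is an exact linear function of the features, the fitted coefficients recover the values used to generate it.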

- Check the BentoML model list to confirm that the model is saved.

bentoml models list

You should see car_power_factor_model in the list, along with its version, size, and creation time, something like this:

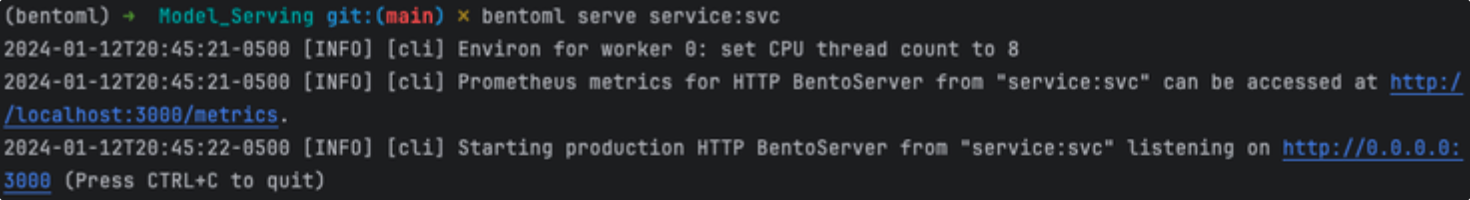

- Next, serve the service by running the following command:

bentoml serve service:svc

This command will return a log confirming that the service is up and running, something like this:

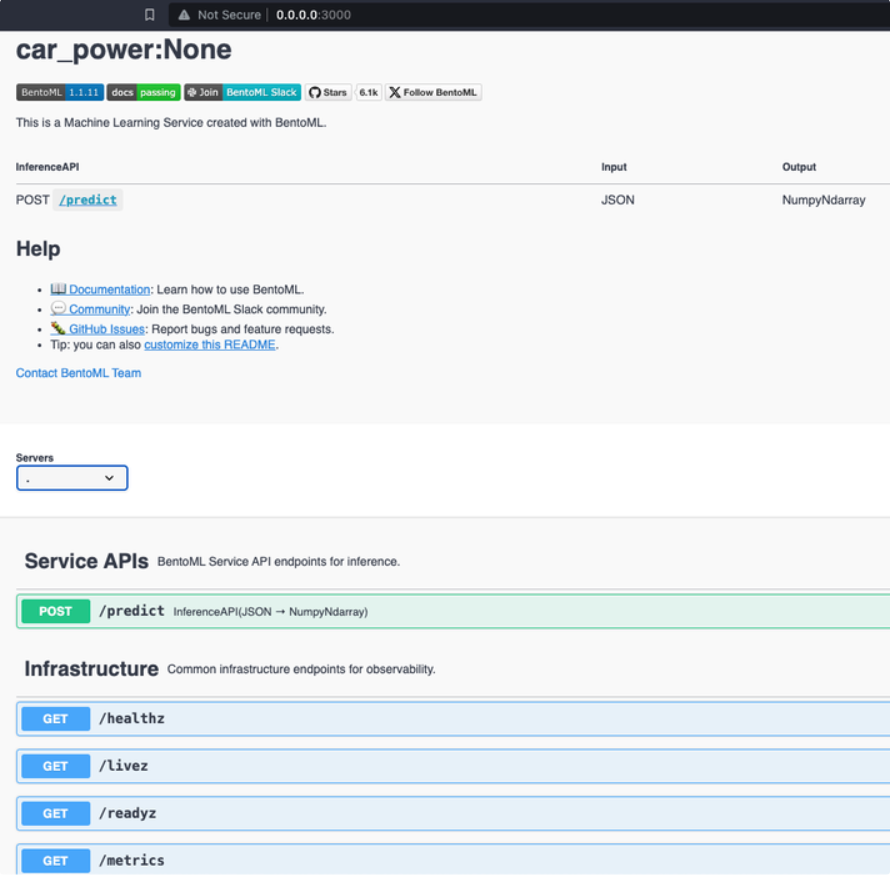

- We are almost done. At this point we can finally test the model service, and there are two ways to do it:

- Using the web UI of the BentoML HTTP server, whose address is displayed in the previous log (in my case http://0.0.0.0:3000). Click the link or paste the URL into your web browser. You should get a dashboard like this:

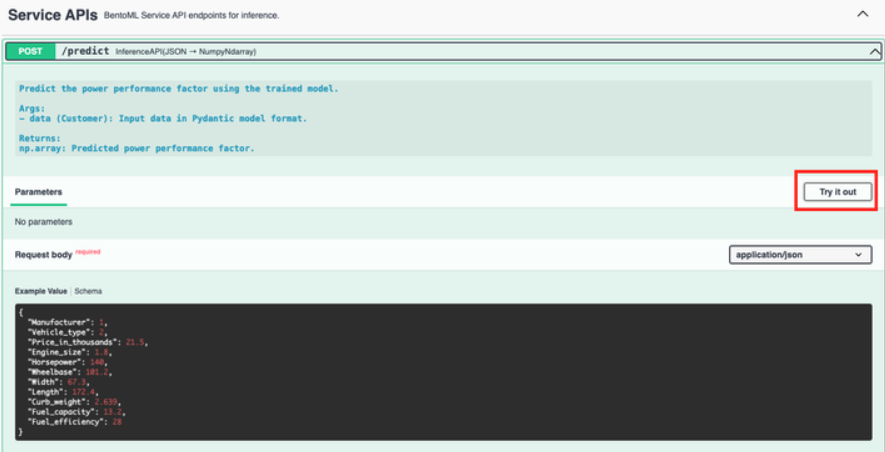

Then, expand the POST option and press the “Try it out” button:

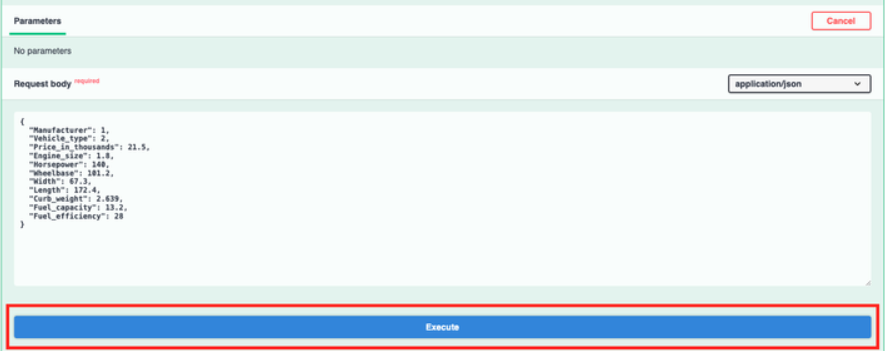

Finally, press the “Execute” button:

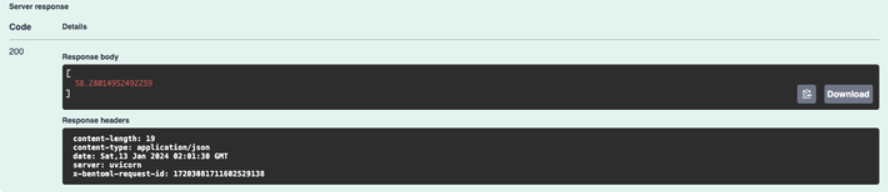

And there it is! The prediction request returns a 200 response code with the power performance factor predicted for the input values.

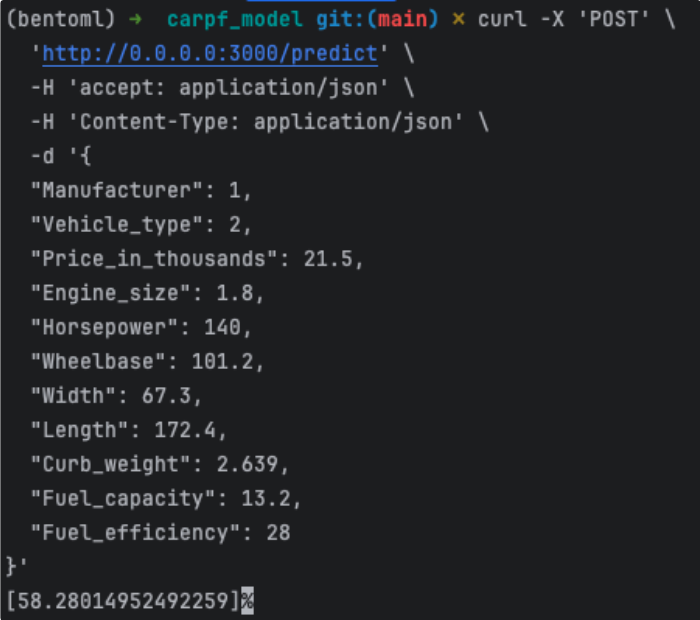

- Alternatively, we can run a curl command in the terminal, like this:


- After testing the service, we can observe that both methods returned the same value, 58.28014952492259. This value can be compared with the row of car_dataset used in the example, whose Power_perf_factor value is 58.28014952. The two values are very close (a difference of 0.00000000492259).
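To put that difference in perspective, the absolute and relative differences can be computed directly from the two values quoted above. The variable names are illustrative; math.isclose uses a relative tolerance of 1e-9 here, an arbitrary threshold chosen for the check.

```python
import math

predicted = 58.28014952492259  # value returned by both testing methods
actual = 58.28014952  # Power_perf_factor in the matching car_dataset row

abs_diff = abs(predicted - actual)
rel_diff = abs_diff / abs(actual)
matches = math.isclose(predicted, actual, rel_tol=1e-9)

print(f"absolute difference: {abs_diff:.3e}")
print(f"relative difference: {rel_diff:.2e}")
print(f"equal within 1e-9 relative tolerance: {matches}")
```

A relative difference below one part in ten billion means the prediction and the stored value agree to well beyond the precision shown in the dataset.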

Bonus

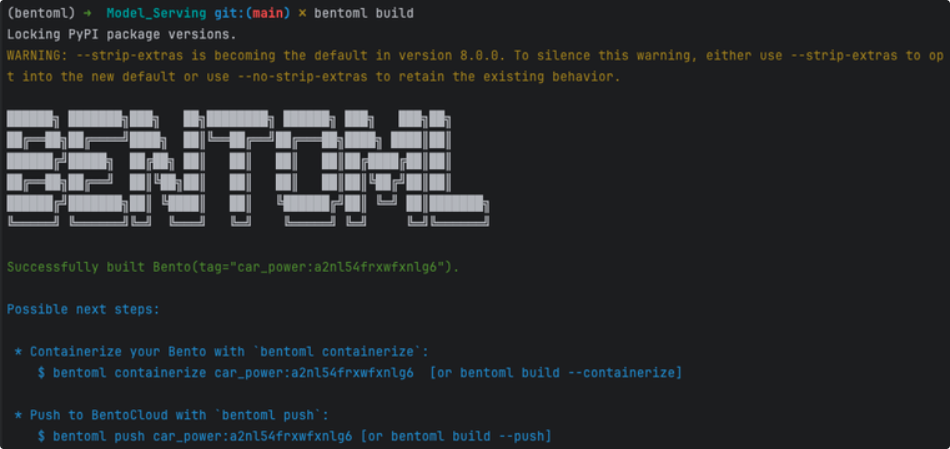

Once the model is working correctly, it can be packaged into the standard distribution format in BentoML, known as a "Bento".

This is where the bentofile.yaml file comes in: it defines the build options, such as dependencies, Docker image settings, and models. To build the Bento, run the build command:

bentoml build

If everything is correct, you will receive an output like this:

At this point you will be able to containerize the Bento, push it to BentoCloud, and more. But for now, let's serve it locally.

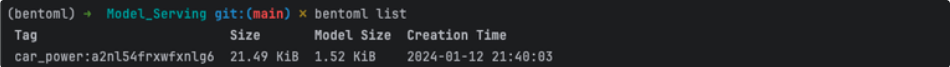

First, let's check our list of Bentos:

bentoml list

You should get an output like this:

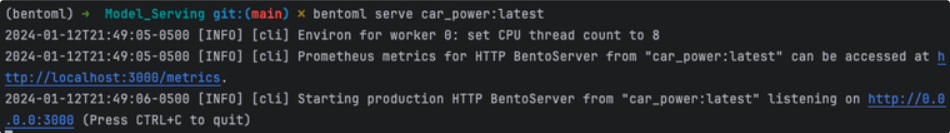

Now, let's serve it locally by running the serve command:

bentoml serve car_power:latest

You should get a serving log output like this:

Now the service is running again, but this time it is served from the Bento instead of the service.py script. You can also:

- “Export a Bento in the BentoML Bento Store as a standalone archive file and share it between teams or move it between different deployment stages”.

- “Export Bentos to and import Bentos from external storage devices, such as AWS S3, GCS, FTP and Dropbox”.

And much more. For more information, check the official BentoML documentation.

Gratitude Section

I hope you enjoyed this lab session and learned a lot. In this chapter, we covered essential steps in data analysis, including data loading, filtering, and visualization. Using Pandas and Matplotlib, we explored patterns within the provided dataset.

Following the data analysis phase, we dove into machine learning with BentoML. You learned how to create a predictive model, serve it locally (first with the service.py script and then as a built Bento), and test it on your own machine. BentoML's user-friendly interface made it easy to deploy and manage the model, making for a smooth development experience.